home

overview

research

resources

outreach & training

visitors center

search

3D Planetarium Dome Movies

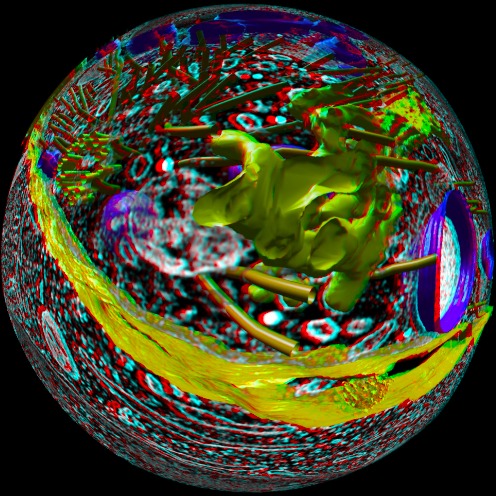

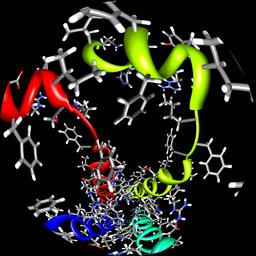

Human T-cell and HIV virus stereoscopic dome projection shown in red-cyan anaglyph mode.

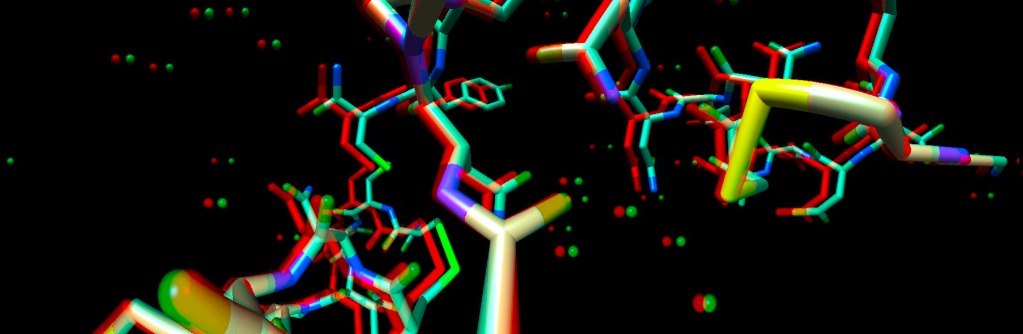

Influenza virus proton channel dome projection, left eye view using moving camera technique.

Introduction

The Imiloa Astronomy Center in Hilo, Hawaii, installed the first 3-D planetarium in 2007. It projects separate left- and right-eye images, and viewers wear notch-filter glasses to perceive depth. A dome display can put the viewer inside a three-dimensional scene, giving a sense of immersion that is not possible with the narrow field of view of flat-screen projection. We have tested a new technique for stereoscopic dome rendering that allows depth perception when looking in any direction, not just the forward direction.

Product

We added dome rendering to UCSF Chimera, including the ability to capture left- and right-eye images from camera positions offset from the center of the dome hemisphere. Fixed camera positions for the left and right eyes do not give correct depth perception if the viewer in the dome turns 90 or 180 degrees from the forward direction, because the viewer's eyes then no longer correspond to the camera positions used in the rendering. To let the viewer see depth while looking in any direction, we tried a rendering method in which each vertical image strip on the dome is rendered from left and right camera positions placed side by side, perpendicular to that strip's direction. In other words, each vertical strip of the dome image uses different camera viewpoints. The strip images were captured and combined with a Python script, panorama.py.

Evaluation

We rendered videos using the fixed and variable camera position techniques and viewed them at the Imiloa planetarium. Left- and right-eye dome master images for each video frame were made at 4096 by 4096 pixels for 30 frames per second playback, with 0.6 degrees of parallax for the 16 meter dome. These were "sliced" for the Imiloa dome by Shawn Laatsch, director of the planetarium. For the fixed camera views we flew over segmented human T-cell electron microscopy data, ending at an HIV virus particle. For the variable camera position method we placed the viewer inside an influenza virus proton channel (PDB 2kih). The variable method required rendering 12000 images (roughly the circumference, in pixels, of a circle 4096 pixels in diameter) for each frame, taking about 30 minutes per frame. To reduce computation time we rendered just one stereo frame (left and right) and then rotated it 360 degrees around the dome zenith in half-degree steps for our test video.

To preview the variable camera position technique on a flat-screen display, we also unrolled 360 degree cylindrical projections for panoramic viewing.
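The half-degree spin used for the test video can be sketched as below. The text does not state the dome master projection, so this assumes the common azimuthal equidistant fisheye layout: zenith at the image center, horizon on the circle inscribed in the square image. Under that assumption each vertical dome strip is a radial line from center to edge (which is why the strip count tracks the horizon circumference), and rotating the dome about its zenith is just a rotation of the image about its center that leaves elevation unchanged. Because each strip's camera pair is perpendicular to its own direction, rotating the whole stereo pair keeps every pair perpendicular to its new direction. The function names are hypothetical and are not taken from Chimera or panorama.py.

```python
import math

def pixel_to_dome(x, y, size):
    """Map a dome-master pixel to (azimuth_deg, elevation_deg).

    Assumption: azimuthal equidistant fisheye, zenith at the image center,
    horizon on the circle inscribed in the size x size square.
    Returns None for pixels outside that circle.
    """
    c = (size - 1) / 2.0                      # image center in pixel coordinates
    dx, dy = x - c, y - c
    r = math.hypot(dx, dy) / (size / 2.0)     # 0 at zenith, 1 at horizon
    if r > 1.0:
        return None
    azimuth = math.degrees(math.atan2(dy, dx)) % 360.0
    return azimuth, 90.0 * (1.0 - r)

def rotate_about_zenith(x, y, size, angle_deg):
    """Position of pixel (x, y) after spinning the dome image by angle_deg."""
    c = (size - 1) / 2.0
    a = math.radians(angle_deg)
    dx, dy = x - c, y - c
    return (c + dx * math.cos(a) - dy * math.sin(a),
            c + dx * math.sin(a) + dy * math.cos(a))

def spin_angles(step_deg=0.5, total_deg=360.0):
    """One rotation angle per video frame for a full turn around the zenith."""
    n = int(round(total_deg / step_deg))
    return [i * step_deg for i in range(n)]

angles = spin_angles()
print(len(angles), "frames,", len(angles) / 30, "seconds at 30 fps")
```

With half-degree steps a full turn is 720 frames, or 24 seconds of video at 30 frames per second, all produced from a single rendered stereo pair.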

Conotoxin protein PDB 1a0m shown with 360 degree cylindrical projection using moving eye camera positions and red-cyan anaglyph display.

Results

Viewing our test animations at the Imiloa planetarium gave good depth perception for forward viewing with the fixed camera position stereo method. The 120-seat planetarium has all seats facing in one direction. Turning to look 90 degrees left or right of the forward direction gave poorer depth perception but did not completely eliminate it, apparently because the brain extrapolates depth information from the forward direction toward the sides, even though the eye images have no parallax when viewed 90 degrees to the side. Turning 180 degrees makes the viewer's left eye see the rendered right-eye image: the eye positions are swapped relative to the fixed rendering positions, and the depth is inverted and confusing.

With the variable camera position technique, depth perception was good when turning to view in any direction. With this technique the image seen in the viewer's peripheral vision should show distortion, since peripheral regions were rendered from camera positions that differ from the actual viewer eye positions. However, peripheral distortion was not apparent.
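The difference between the two methods when the viewer turns can be illustrated with a simplified model in the horizontal plane. It ignores elevation, the zenith and scene depth, and uses hypothetical helper names. The fixed method places one camera pair along the forward-facing viewer's eye axis, while the variable method places a pair perpendicular to each strip's direction. The signed component of the left-to-right camera offset along the turned viewer's own eye axis then falls as cos(turn) for the fixed method: zero at 90 degrees and negative (swapped eyes) at 180 degrees. For the strip the viewer is facing, the variable method keeps the full separation.

```python
import math

def right_vector(turn_deg):
    """Viewer's left-to-right eye axis after turning turn_deg
    counterclockwise (seen from above) from forward, which is +y."""
    a = math.radians(turn_deg)
    return (math.cos(a), math.sin(a))

def fixed_eyes(separation):
    """Fixed method: one camera pair on the forward viewer's eye axis (x)."""
    h = separation / 2.0
    return (-h, 0.0), (h, 0.0)

def strip_eyes(strip_turn_deg, separation):
    """Variable method: the pair for a strip lies perpendicular to that strip."""
    ux, uy = right_vector(strip_turn_deg)
    h = separation / 2.0
    return (-h * ux, -h * uy), (h * ux, h * uy)

def effective_baseline(eyes, turn_deg):
    """Signed left-to-right camera offset along the turned viewer's eye axis.

    Negative values mean the rendered left and right images reach the wrong eyes.
    """
    (lx, ly), (rx, ry) = eyes
    ux, uy = right_vector(turn_deg)
    return (rx - lx) * ux + (ry - ly) * uy

for turn in (0, 90, 180):
    fixed = effective_baseline(fixed_eyes(1.0), turn)
    variable = effective_baseline(strip_eyes(turn, 1.0), turn)
    print(f"turn {turn:3d} deg: fixed {fixed:+.2f}, variable {variable:+.2f}")
```

This model only accounts for the zero and inverted parallax seen with fixed cameras. It does not capture the partial depth people still reported at 90 degrees, which the text attributes to the brain extrapolating from the forward view.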

An advantage of fixed camera position rendering is that tilting your head back gives good depth perception at the top (zenith) of the dome, which is not the case for the variable camera position method. So fixed camera positions work well for forward and up/down viewing, while variable camera position rendering works well for viewing the horizon 360 degrees around.

Recommendation

Variable camera position stereoscopic rendering can put the viewer in the middle of a molecule, but the rendering time is thousands of times longer: for our test case, a minute of video at 30 frames per second would take about 1 month to render on a single computer. A direct comparison of the fixed and variable camera techniques using identical scenes would give a better sense of the merits of the two rendering approaches.
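The "about 1 month" estimate follows from the per-frame cost reported for the test case, as this small calculation shows. The `machines` parameter is a hypothetical addition. It assumes frames can be split evenly across identical computers with no overhead, which is an idealization the text does not test.

```python
def render_days(video_seconds, fps=30, minutes_per_frame=30.0, machines=1):
    """Wall-clock days to render a variable-camera dome video.

    Defaults follow the test case in the text: 30 frames per second and
    about 30 minutes per stereo frame. `machines` is a hypothetical
    parameter assuming perfectly even splitting of frames.
    """
    frames = video_seconds * fps
    total_minutes = frames * minutes_per_frame / machines
    return total_minutes / (60.0 * 24.0)

print(f"1 minute of video: {render_days(60):.1f} days on one computer")
```

One minute is 1800 frames. At 30 minutes each, that is 54,000 minutes, or 37.5 days, consistent with the estimate of roughly a month.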

Laboratory Overview | Research | Outreach & Training | Available Resources | Visitors Center | Search